An operating system is, conceptually, a layer of software residing just above the hardware and below all other software layers. It hides the intricate details of hardware resources such as the processor, memory, secondary storage, and input and output devices, and provides corresponding simple, intuitive, abstract resources. An operating system is also a resource manager, managing the hardware resources of a computer.

1. A Computer System

A computer system comprises hardware and software. A very important piece of software in a computer system is its operating system. Conceptually, as shown in the figure above, an operating system is a layer of software that resides just over the hardware. There might be more layers of system and application software on top of the operating system. The operating system hides the complexity of the hardware and presents an intuitive, coherent, easy-to-use interface for using the computer. Most of the time, the term operating system refers to the operating system kernel, which directly manages the hardware and provides the system call application programming interface (API) to the software layers above it. Then, there are system programs for jobs like running a program, creating and managing files, taking backups, etc., which are often bundled with the kernel to make up the operating system. But there is nothing magical about these system programs; anyone can write them using the system calls. In the rest of this post, we will refer to the kernel when we use the term “operating system”.

In the figure shown above, the kernel is the only truly distinct layer on top of the hardware. The system programs and libraries share a fuzzy boundary with outer software layers. In fact, some higher level software layers might implement their own lower level layers using the kernel’s system call interface.
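To illustrate that system programs are ordinary programs built on system calls, here is a minimal sketch of a `cp`-like copy utility. It is written in Python, whose `os.open`, `os.read`, `os.write` and `os.close` are thin wrappers over the POSIX system calls of the same names. The name `copy_file` is hypothetical, and the 4096-byte buffer size is an arbitrary assumption.

```python
import os


def copy_file(src: str, dst: str, bufsize: int = 4096) -> int:
    """Copy src to dst using only the open/read/write/close system calls.

    Returns the number of bytes copied. (Hypothetical helper for illustration.)
    """
    in_fd = os.open(src, os.O_RDONLY)
    try:
        # 0o644: owner read/write, others read-only (the process umask still applies).
        out_fd = os.open(dst, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
        try:
            total = 0
            while True:
                chunk = os.read(in_fd, bufsize)
                if not chunk:  # read() returns no bytes at end of file
                    break
                # write() may accept fewer bytes than offered, so loop until done.
                view = memoryview(chunk)
                while view:
                    written = os.write(out_fd, view)
                    view = view[written:]
                total += len(chunk)
            return total
        finally:
            os.close(out_fd)
    finally:
        os.close(in_fd)
```

A real `cp` also deals with permissions, sparse files and many error cases. The sketch only shows that nothing beyond the system call interface is required.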

An operating system does two things. The first is resource abstraction: the operating system hides the intricate details of the hardware from higher-layer software and presents a clean, elegant and easy-to-use interface in the form of system calls, the API for using the computer. Second, it is the resource manager for the computer: it allocates and combines the hardware resources so that objectives such as low response time and high throughput are met as far as possible.

2. Resource Abstraction

Operating systems provide abstractions of hardware resources for higher-layer software. Hardware resources like the processor, main memory, communication devices, persistent storage devices, etc. are inherently complex. It would be impractical for application programmers to write programs that implement the application logic and also generate the instructions that drive the hardware devices. The operating system hides the intricate hardware details and provides simple, intuitive and easy-to-use abstractions. These abstractions are further refined and exposed as the system call API, which higher-layer application programs can use. Effectively, the operating system provides a virtual machine: to higher-level application programs, the computer appears to consist of just the operating system (kernel and system programs).
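As an illustration of this abstraction, the sketch below uses Python on a POSIX system. It applies one and the same `write` system call to two very different kernel objects: a file on disk and a pipe. The helper `write_all` is hypothetical. It loops because `write` may transfer fewer bytes than requested.

```python
import os
import tempfile


def write_all(fd: int, data: bytes) -> None:
    """Write every byte of data to fd, whatever kind of object fd refers to."""
    view = memoryview(data)
    while view:
        # write() may accept fewer bytes than offered; continue from where it stopped.
        view = view[os.write(fd, view):]


# A regular file on disk...
fd_file, path = tempfile.mkstemp()
write_all(fd_file, b"to a file\n")
os.close(fd_file)
with open(path, "rb") as f:
    from_file = f.read()
os.remove(path)

# ...and a pipe, a purely in-kernel object: same call, same interface.
read_end, write_end = os.pipe()
write_all(write_end, b"to a pipe\n")
os.close(write_end)
from_pipe = os.read(read_end, 100)
os.close(read_end)
```

The program never needs to know where the bytes end up. The kernel routes each request to the file system code or the pipe code behind the same descriptor-based interface.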

3. Process

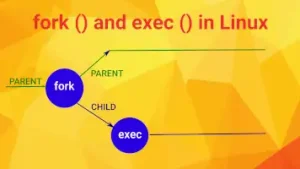

The job of a computer is to run programs. The operating system provides an abstraction named “process”, which runs a program. There are a number of “live” processes in the operating system, which appear to be progressing concurrently at any time. The fork system call creates a new process, a copy of the calling process. Then, there is the exec family of calls, with which a process can replace the program it is executing with a given program. Multiple concurrent processes can execute the same program using an exec call; however, these processes are independent of one another. Each process has its own independent address space, comprising code, data and system data segments. The operating system provides calls for communication between processes and for synchronization of processes.
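Here is a minimal sketch of the fork-then-exec pattern. It assumes a POSIX system and Python's `os` module, whose `fork`, `execvp` and `waitpid` wrap the corresponding system calls. `run_program` is a hypothetical helper. Using exit status 127 for a failed exec follows the usual shell convention.

```python
import os


def run_program(argv: list[str]) -> int:
    """Fork a child, make it execute argv with execvp, and return its exit code."""
    pid = os.fork()
    if pid == 0:
        # Child: replace this process's program with the requested one.
        try:
            os.execvp(argv[0], argv)
        finally:
            # Reached only if exec failed; never fall through into the parent's code.
            os._exit(127)
    # Parent: block until the child terminates, then decode its status.
    _, status = os.waitpid(pid, 0)
    return os.waitstatus_to_exitcode(status)
```

For example, `run_program(["ls", "-l"])` forks and runs `ls -l` in the child. The parent gets the exit status back once the child finishes. Each call creates a separate process with its own address space, even when several of them run the same program.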

By default, a process has a single thread of execution. The pthreads (POSIX threads) library can be used to create multiple threads of execution within a process. These threads share the data segment of the underlying process but have independent stacks. pthreads also provides calls for synchronization of threads.
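The sharing described above can be observed with Python's `threading` module, which CPython implements on top of pthreads on POSIX systems. In this sketch, the names are illustrative. Every thread appends to the same module-level list. Each thread's `local_total` lives in its own stack frame and is never seen by the other threads.

```python
import threading

shared_results = []  # global data: one list visible to every thread


def worker(name: str, count: int) -> None:
    local_total = 0  # a local variable: lives on this thread's own stack
    for i in range(count):
        local_total += i
    # Append to the shared list; a single list.append is thread-safe in CPython.
    shared_results.append((name, local_total))


threads = [threading.Thread(target=worker, args=(f"t{k}", 10 * (k + 1))) for k in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every thread to finish
```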

4. Virtual Memory

A computer has physical main memory, which is simply a sequence of bytes. The operating system provides virtual memory, which can be much larger than the physical memory. The operating system uses secondary storage along with the main memory and “intelligently” moves parts of main memory to secondary storage and back as needed to keep operations going. Each process gets its own private address space, which is protected from access by other processes. The addresses in this virtual address space are virtual addresses, and programs work with virtual addresses. During execution, the memory management unit (MMU) converts virtual addresses into physical addresses. Since the virtual address space is bigger than the physical memory, and multiple processes are resident in memory at any time, some parts of the processes’ address spaces are generally not resident in main memory at a given moment. So, while executing an instruction, the memory management unit might find that a required operand is not in physical memory and that the concerned part of memory needs to be loaded from secondary storage into main memory before the instruction can execute. A trap called a page fault, similar to an interrupt, is generated. The operating system loads the relevant data from secondary storage into main memory, and the instruction is then executed.
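The translation and page-fault path can be modelled in a few lines. This is a toy simulation under stated assumptions, not how a real MMU works. It assumes a 4096-byte page size, a single-level page table kept in a dictionary, and first-in first-out (FIFO) page replacement when no frame is free. In real systems the lookup is done in hardware, and only the fault handling runs in the kernel. `ToyMMU` and its methods are hypothetical names.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class ToyMMU:
    """Toy demand-paging model: virtual page number -> physical frame number."""

    def __init__(self, num_frames: int) -> None:
        self.page_table: dict[int, int] = {}  # resident pages only
        self.free_frames = list(range(num_frames))
        self.loaded_order: list[int] = []  # oldest resident page first (for FIFO)
        self.page_faults = 0

    def _handle_page_fault(self, vpn: int) -> None:
        # The "operating system" part: find a frame, evicting the oldest page if
        # memory is full, then (conceptually) read the page from secondary storage.
        self.page_faults += 1
        if self.free_frames:
            frame = self.free_frames.pop(0)
        else:
            victim = self.loaded_order.pop(0)
            frame = self.page_table.pop(victim)  # a dirty victim would be written back
        self.page_table[vpn] = frame
        self.loaded_order.append(vpn)

    def translate(self, vaddr: int) -> int:
        """Split a virtual address into (page, offset) and map it to a physical address."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn not in self.page_table:
            self._handle_page_fault(vpn)  # trap to the OS, then retry the access
        return self.page_table[vpn] * PAGE_SIZE + offset
```

With two physical frames, touching three distinct pages forces the oldest page out. Touching that page again causes another fault, while pages that are still resident translate without one.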

The advantages of virtual memory are that a process gets a large, private and protected address space and the program can use virtual addresses for accessing memory locations. The operating system and the memory management unit work together to make this possible.

5. Concurrency

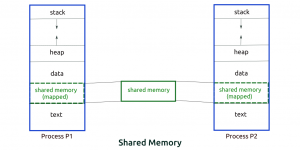

Concurrency is the capability of doing multiple things at the same time. In everyday life, many things happen at the same time. For example, think about a weather application running on a computer. It might have a process monitoring the temperature, a second process monitoring the wind speed, and a third process monitoring the air quality. There might be a “manager” process that monitors these processes, records their observations, and accepts and processes user commands. The kernel supports multiple processes running concurrently. The environment monitoring processes might need to communicate with the manager process. For inter-process communication, the operating system provides mechanisms like pipes, FIFOs (named pipes), message queues and shared memory. The processes might also need synchronization among themselves; for example, only one process should be able to write data to the central store at a time. We might also need a process to wait until certain conditions hold before it proceeds. The operating system provides semaphores for these synchronization requirements.
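As one concrete example of inter-process communication, the sketch below has a forked "monitor" child send readings to its "manager" parent through a pipe. It assumes a POSIX system and uses Python's `os.pipe`, `os.fork`, `os.read` and `os.write`. The function name and the one-reading-per-line message format are hypothetical. Each side closes the pipe end it does not use; otherwise the parent would never see end-of-file.

```python
import os


def monitor_and_report(readings: list[float]) -> list[float]:
    """Fork a monitor child that sends readings over a pipe; the parent collects them."""
    read_end, write_end = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child (monitor): send one reading per line, then exit.
        try:
            os.close(read_end)
            for value in readings:
                os.write(write_end, f"{value}\n".encode())
            os.close(write_end)
        finally:
            os._exit(0)  # never fall through into the parent's code
    # Parent (manager): close the unused write end so EOF arrives when the child exits.
    os.close(write_end)
    data = b""
    while chunk := os.read(read_end, 1024):
        data += chunk
    os.close(read_end)
    os.waitpid(pid, 0)  # reap the child
    return [float(line) for line in data.decode().splitlines()]
```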

By default, each process has a single thread of execution, but some processes might need several. For example, the manager process might have one thread for communicating with the environment monitoring processes and a second thread for processing user commands. The operating system supports multiple threads in a process. The threads of a process share the process’s global data, so communication among them is straightforward. But the availability of global data to all threads of the process at all times creates its own problems. For example, some critical data should be accessed by only one thread at a time, so threads need to be synchronized. The operating system provides the mutex (short for mutual exclusion), with which a thread can access a critical section (data or code) exclusively. The operating system also provides condition variables, which let a thread wait until a certain condition becomes true.
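The mutex and condition-variable pattern can be sketched with Python's `threading.Lock` and `threading.Condition`. These play the roles of a pthreads mutex and condition variable. `SharedStore` is a hypothetical name for the manager's central store. Writer threads add readings inside a critical section, and the manager waits on the condition until a given number of readings has arrived.

```python
import threading


class SharedStore:
    """Central store guarded by a mutex, with a condition variable for waiting."""

    def __init__(self) -> None:
        self._lock = threading.Lock()  # the mutex
        self._ready = threading.Condition(self._lock)  # condition tied to that mutex
        self._readings: list[tuple[str, float]] = []

    def add(self, source: str, value: float) -> None:
        with self._lock:  # critical section: one writer at a time
            self._readings.append((source, value))
            self._ready.notify_all()  # wake any thread waiting on the condition

    def wait_for(self, n: int) -> list[tuple[str, float]]:
        with self._ready:  # acquires the same mutex
            # Re-check the condition in a loop after every wakeup (standard idiom).
            while len(self._readings) < n:
                self._ready.wait()  # atomically releases the mutex while waiting
            return list(self._readings)


store = SharedStore()
sensors = [
    threading.Thread(target=store.add, args=(name, value))
    for name, value in [("temp", 21.5), ("wind", 3.2), ("aqi", 42.0)]
]
for t in sensors:
    t.start()
result = store.wait_for(3)  # the manager blocks until all three readings arrive
for t in sensors:
    t.join()
```

The `while` loop around `wait()` is the conventional way to use a condition variable. A woken thread must re-check that the condition it waited for actually holds before proceeding.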

6. File System

Computers need to store data. The data in main memory (RAM) is lost when the power is switched off. So it is important that computers store data persistently, that is, in a way that survives system boot and shutdown cycles. It is also important that data is easily available and usable by users. Traditionally, data has been stored on the hard disk in the system. Nowadays, solid-state drives (SSDs) are becoming increasingly common and provide a faster, more reliable and more compact medium for persistent storage. Devices that store data persistently are known as secondary storage devices.

Operating systems provide an important abstraction called the file system. A file system is a structure used by the operating system for organizing and managing files on a secondary storage device. A file stores data. There are three kinds of files, namely ordinary files, directories and special files. An ordinary file simply stores data as a sequence of bytes. A directory, also called a folder, contains files of all types: ordinary files, directories and special files. A directory maps file names to the files themselves, much as pointers refer to actual data in programming languages. Because directories can contain other directories, they give the file system a tree structure. Every directory in a file system has a parent directory, except the top directory, called root and denoted by “/”. Special files mostly represent devices, named pipes (FIFOs), sockets, etc.
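The kinds of files can be told apart from the file mode returned by the `stat`/`lstat` system call. Below is a minimal sketch using Python's `os.lstat` and the `stat` module's `S_IS*` tests; the function names are hypothetical. It uses `lstat` so that symbolic links, another kind of directory entry, are reported rather than followed.

```python
import os
import stat


def classify(path: str) -> str:
    """Return the kind of file at path (symbolic links are not followed)."""
    mode = os.lstat(path).st_mode
    if stat.S_ISREG(mode):
        return "ordinary file"
    if stat.S_ISDIR(mode):
        return "directory"
    if stat.S_ISFIFO(mode):
        return "special: named pipe (FIFO)"
    if stat.S_ISSOCK(mode):
        return "special: socket"
    if stat.S_ISCHR(mode) or stat.S_ISBLK(mode):
        return "special: device"
    if stat.S_ISLNK(mode):
        return "symbolic link"
    return "other"


def list_dir(path: str) -> dict[str, str]:
    """A directory is essentially a mapping from names to files; show each name's kind."""
    return {name: classify(os.path.join(path, name)) for name in sorted(os.listdir(path))}
```

For example, `classify("/")` reports the root directory, and on typical Unix-like systems `classify("/dev/null")` reports a device.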

7. Input and Output

Computers need to interact with the external world; they need to take inputs and give outputs. So, a computer is connected to multiple I/O devices. I/O devices are complex. Each device is connected to the computer via a controller, and each controller is operated by a device driver, which is a part of the operating system kernel. To do an I/O operation, a process makes a system call like “read” or “write”. Control then passes to the kernel, where the relevant device driver takes over, communicates with the controller, and carries out the I/O operation. This is a much simplified view; the actual process is a lot more complicated. The device driver takes care of all the controller complexity, so a user program can use abstractions like read, write and print calls to do I/O. Similarly, for communication over the network interface, the operating system provides the abstraction called “socket”. Processes can communicate over the network via the socket interface, using protocols like the User Datagram Protocol (UDP) or the Transmission Control Protocol (TCP), which are also parts of the operating system.
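Here is a minimal sketch of the socket abstraction, assuming Python's standard `socket` module and the loopback interface. One thread accepts a TCP connection and echoes what it receives, and the main program connects as a client. The program never touches the network controller or implements TCP itself; the kernel does both. Port 0 asks the kernel to choose any free port, and the message is made up.

```python
import socket
import threading


def echo_once(server: socket.socket) -> None:
    """Accept one TCP connection and send back whatever arrives on it."""
    conn, _ = server.accept()
    with conn:
        while data := conn.recv(1024):
            conn.sendall(data)


# Server side: listen on the loopback interface, on a port chosen by the kernel.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]
echo_thread = threading.Thread(target=echo_once, args=(server,))
echo_thread.start()

# Client side: the kernel's TCP implementation handles connection setup,
# retransmission and ordering; the program only calls connect/sendall/recv.
with socket.create_connection(("127.0.0.1", port)) as client:
    client.sendall(b"temperature=21.5")
    client.shutdown(socket.SHUT_WR)  # signal end of data so the echo loop ends
    reply = b""
    while chunk := client.recv(1024):
        reply += chunk

echo_thread.join()
server.close()
```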

8. Resource Manager

The foregoing discussion, which views the operating system as a virtual machine, is essentially a top-down viewpoint. There is a bottom-up viewpoint as well: an operating system is a resource manager. Each of the hardware building blocks, like the CPU, memory, secondary storage and I/O devices, is a resource and needs to be used optimally to meet certain objectives. These objectives can include good response time for users, high throughput, fair allocation of resources so that no user is starved of a resource (a user is, generally, a process), a guaranteed predetermined network speed, etc.

The resources in a computer system are scarce and the demand is high. For example, a system might have a single processor and a hundred or so processes, of which perhaps ten are ready to run and vie for the processor at any time. The operating system must choose one of these ten processes to use the processor next. These processes form the “ready queue”, the queue of processes ready to run. How is this queue ordered? It is a matter of policy. The queue might be ordered first-in first-out. In that case, the operating system takes the process at the head of the queue and runs it for a fixed time slice, or less if the process voluntarily relinquishes the CPU earlier. Then, if the process is still ready to run, it is placed at the end of the ready queue. The operating system is essentially multiplexing the CPU among multiple processes that are ready to run. Here, the CPU is time multiplexed, as each process in the queue uses it for the time slice or less.
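A first-in first-out ready queue with a fixed time slice (round-robin scheduling) can be simulated in a few lines. This sketch makes simplifying assumptions. All processes are ready at time 0 and never block for I/O, and a process gives up the CPU early only when it finishes. The name `round_robin` and the burst times are hypothetical.

```python
from collections import deque


def round_robin(bursts: dict[str, int], quantum: int) -> list[tuple[str, int, int]]:
    """Simulate time multiplexing of one CPU.

    bursts maps a process name to the CPU time it needs. Returns the trace of
    (process, start, end) slices in the order they ran.
    """
    ready = deque(bursts.items())  # the ready queue; the head runs next
    clock = 0
    trace = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # a full slice, or less if it finishes early
        trace.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            ready.append((name, remaining - run))  # still ready: back to the tail
    return trace
```

For example, with bursts A=5, B=2 and C=4 and a quantum of 2, the CPU runs A, B, C, A, C, A. B completes at time 4, C at time 10 and A at time 11. Completion times like these are what response-time and throughput objectives are measured against.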

Instead of being time multiplexed, a resource might be space multiplexed. That is, instead of processes taking turns using the resource, each process is given a part of it. This happens with virtual memory: each process is given a part of main memory, so that part of the process stays in main memory while the rest of its address space is kept on secondary storage. This is managed entirely by the memory management unit and the operating system; the process is only aware of its virtual address space. Similarly, the hard disk is space multiplexed: each user is given a small part of it. The operating system keeps track of the location of files and also manages the free space; that is, it knows where to write data when a process performs a write operation.
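Space multiplexing of the disk can be illustrated with a toy free-space manager. The sketch assumes a disk made of equally sized blocks, tracked by a free-block bitmap. A bitmap is one common technique among several, such as free lists. A table records which blocks each file owns. `BlockAllocator` and its methods are hypothetical.

```python
import errno


class BlockAllocator:
    """Toy free-space manager: a bitmap of used blocks plus a per-file block table."""

    def __init__(self, num_blocks: int) -> None:
        self.in_use = [False] * num_blocks  # the free-block bitmap
        self.files: dict[str, list[int]] = {}  # which blocks each file occupies

    def allocate(self, name: str, n: int) -> list[int]:
        """Give n free blocks (lowest-numbered first) to file `name`."""
        free = [i for i, used in enumerate(self.in_use) if not used][:n]
        if len(free) < n:
            raise OSError(errno.ENOSPC, "No space left on device")
        for i in free:
            self.in_use[i] = True
        self.files.setdefault(name, []).extend(free)
        return free

    def delete(self, name: str) -> None:
        """Return all of a file's blocks to the free pool."""
        for i in self.files.pop(name):
            self.in_use[i] = False

    def free_count(self) -> int:
        return self.in_use.count(False)
```

After a file is deleted, a new file may receive non-contiguous blocks. For example, it might get blocks 0, 1, 2 and 5 on an eight-block disk. The per-file table is what lets the operating system find a file's data again.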

9. Conclusion

An operating system provides abstractions of hardware resources, making it easier to build application programs. An operating system is also a resource manager, managing the computer hardware resources and meeting overall system objectives.